Detecting Insider Threats with Ethical AI

- Marketing Team

- Oct 18, 2025

- 12 min read

Updated: 5 days ago

Detecting insider threats isn't about bigger firewalls or more intrusive monitoring. It's about shifting to proactive, ethical risk management that respects human dignity. The challenge is spotting deviations in user activity—like unusual data access or logins—to flag potential risk indicators before they become costly incidents. For organizations committed to integrity and compliance, this means adopting AI that is not only powerful but also fundamentally ethical.

Logical Commander's AI-powered platform provides a solution. It allows HR, Compliance, and Security teams to gain critical visibility into human capital risks without resorting to invasive surveillance. Our platform's privacy-first design (ISO 27K, GDPR, CPRA) ensures you can act on credible risk indicators while upholding employee privacy and meeting strict regulations like EPPA. This ethical approach helps you know first, act fast, and protect your organization from the inside out.

The Hidden Risk in Today's Business

Let's be blunt: insider threats are a clear and present danger, and they're costing businesses millions every year. The move to hybrid work and the explosion of cloud tools have completely blurred the lines between safe and risky behavior, leaving organizations wide open from the inside.

This creates a serious challenge. How do you protect your most sensitive data without resorting to invasive surveillance that just destroys employee trust?

The answer is to stop reacting to damage and start defending proactively. It’s all about focusing on behavioral risk indicators. Ethical AI platforms, like those from Logical Commander, give security, HR, and compliance teams the visibility they need. Our privacy-first design means you can act on credible risks while respecting employee privacy and meeting tough regulations like GDPR and CPRA.

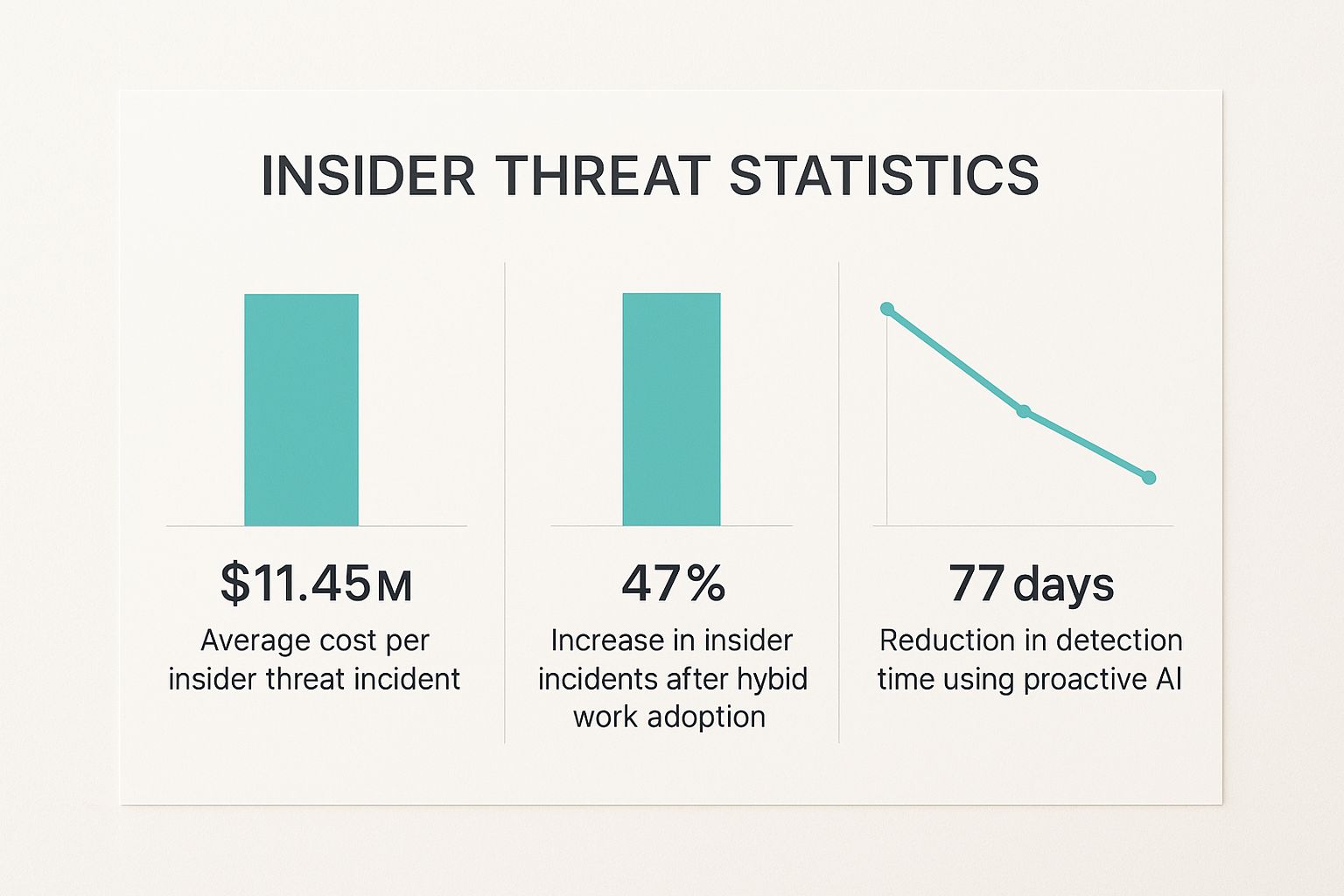

This infographic breaks down the financial impact, the rising frequency of these threats, and how much of a game-changer proactive AI can be.

The numbers don't lie. Costs are soaring, but AI-driven solutions are proven to shrink the detection window from months down to just a few days.

Real-World Scenario: A Data Breach in Plain Sight

Picture this: a trusted, long-term employee at a financial services firm is about to leave for a competitor. In her final two weeks, she begins accessing client portfolio files she hasn't looked at in years and exporting large reports to a personal cloud drive. It all happens during normal work hours, using her valid credentials, so traditional security tools see nothing wrong.

However, an ethical, non-intrusive AI system flags this activity immediately. Logical Commander's platform would recognize a major deviation from her established behavioral baseline: the sudden spike in exported data and the access to long-dormant files are both clear risk indicators. This early warning gives the company a chance to intervene, preventing the loss of clients and intellectual property. Instead of discovering the breach months later, they can act fast, thanks to real-time detection.

Unifying Your Defense Strategy

You can't detect insider threats effectively if your teams are stuck in silos. Security, HR, Legal, and Compliance absolutely must work together to get a complete picture of the risk landscape.

It really comes down to a couple of key moves:

Actionable Insight 1: Build a cross-departmental incident response team. When an alert surfaces, this team can review the context together, ensuring the response is fair, compliant, and unified. This fosters the cross-department collaboration essential for modern risk management.

Actionable Insight 2: Get everyone on a centralized platform. A solution like our Risk-HR module provides a common dashboard where teams can share notes and manage cases. This eliminates crossed wires and ensures everyone works from the same playbook.

By taking an ethical, AI-powered approach, you can protect your organization's most critical assets, maintain a culture of trust, and see a real, measurable return on your investment.

**Request a demo** of E-Commander to see our privacy-first AI in action.

Understanding the Faces of Insider Threats

To build a real defense, you first have to know your enemy. Not every insider threat wears the same mask, and that's exactly why generic, one-size-fits-all security strategies are doomed from the start. The motivations, behaviors, and ultimate damage from internal risks vary wildly.

Being able to tell the difference between malicious intent and a simple mistake is the entire game when it comes to detecting insider threats and responding the right way.

The entire threat landscape has shifted inward. Insider threats are no longer a secondary concern; for many, they're the primary one. A recent study found that a staggering 64% of cybersecurity professionals now see malicious or compromised insiders as a bigger danger than external attackers. Even more telling, 42% point the finger specifically at malicious insiders.

Toss in the explosion of generative AI tools creating unauthorized backdoors for data, and you've got a perfect storm. It’s critical to understand the different personas behind these threats to build a truly resilient security posture.

The Malicious Insider

This is the classic villain—the employee who knowingly abuses their access for revenge, financial gain, or ideological reasons. Their actions are calculated and often cleverly disguised as normal work, making them incredibly difficult to spot without the right behavioral analytics.

Think about a salesperson on their way out the door. In their last two weeks, they quietly download the entire client list and proprietary sales playbooks to a personal cloud drive. Their goal is crystal clear: take your intellectual property straight to a competitor.

Key Takeaway: Malicious insiders are masters of hiding in plain sight. You won't catch them by looking for simple policy violations. The secret is to focus on behavioral anomalies—like a sudden spike in data downloads or unusual file transfers—that stand out from their normal patterns.

The Negligent Employee

Far more common than the bad actor is the accidental insider. This employee has zero ill intent. They're just human. They might fall for a sophisticated phishing email, misconfigure a cloud storage setting, or accidentally send a sensitive report to the wrong "John Smith" in their contacts.

The damage can be just as catastrophic as a malicious attack, but the solution isn't punishment—it's education and better safeguards.

A few practical tips for managing this risk:

Continuous, Context-Aware Training: Forget the once-a-year slideshow. Provide quick, relevant micro-trainings triggered by risky actions. This reinforces best practices right when they're needed most.

Build Digital Guardrails: Use technology to create safety nets. An EPPA-compliant platform can alert an employee when they're about to email sensitive data outside the company, giving them a moment to pause and confirm before a costly mistake is made. Our guide to AI-powered human risk management offers more strategies for building these safeguards.
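To make the guardrail idea concrete, here is a minimal Python sketch of a "pause and confirm" check. It is an illustration under stated assumptions, not how any particular platform works: the internal domain list, the sensitivity labels, and the `OutgoingEmail` type are all hypothetical. Note that it looks only at recipient domains and classification labels, never at message content.

```python
from dataclasses import dataclass

# Hypothetical values for illustration; neither comes from a real deployment.
INTERNAL_DOMAINS = {"example.com"}
SENSITIVE_LABELS = {"confidential", "restricted"}


@dataclass
class OutgoingEmail:
    recipients: list[str]
    attachment_labels: list[str]  # classification labels, not file contents


def external_recipients(email: OutgoingEmail) -> list[str]:
    """Return recipients whose domain is not on the internal list."""
    return [
        r for r in email.recipients
        if r.rsplit("@", 1)[-1].lower() not in INTERNAL_DOMAINS
    ]


def needs_confirmation(email: OutgoingEmail) -> bool:
    """True when labeled-sensitive attachments are headed outside the company."""
    has_sensitive = any(
        label.lower() in SENSITIVE_LABELS for label in email.attachment_labels
    )
    return has_sensitive and bool(external_recipients(email))


draft = OutgoingEmail(
    recipients=["john.smith@example.com", "john.smith@gmail.com"],
    attachment_labels=["Confidential"],
)
if needs_confirmation(draft):
    print("Please confirm external recipients:", external_recipients(draft))
```

The point of the design is the prompt, not a block: the employee gets a moment to catch the wrong "John Smith" before the message leaves.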

The Compromised User

The third and final face is the compromised user. Here, the employee is an unwitting pawn. An external attacker has stolen their credentials—through malware, phishing, or a data breach—and is now using their legitimate access to move silently through your network.

To your systems, everything looks normal at first. But soon, the attacker's behavior will diverge from the employee's baseline. They might log in from a strange location, access servers at 3 a.m., or try to escalate their privileges.
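One common way to catch the "strange location" signal is an impossible-travel check: if two consecutive logins are farther apart than anyone could plausibly travel in the time between them, the account deserves a closer look. The Python sketch below is a simplified illustration. The 900 km/h speed limit, the 1 km tolerance, and the `(timestamp, latitude, longitude)` login shape are assumptions, and real systems also have to account for VPNs and imprecise IP geolocation.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0  # assumed threshold, roughly airliner cruising speed


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometers."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(prev_login, next_login, max_kmh=MAX_PLAUSIBLE_KMH):
    """Each login is a (timestamp, latitude, longitude) tuple."""
    t1, lat1, lon1 = prev_login
    t2, lat2, lon2 = next_login
    hours = abs((t2 - t1).total_seconds()) / 3600
    distance = haversine_km(lat1, lon1, lat2, lon2)
    if hours == 0:
        # Simultaneous logins: flag only if the locations differ by more
        # than an assumed 1 km tolerance for location jitter.
        return distance > 1.0
    return distance / hours > max_kmh


new_york = (datetime(2025, 10, 13, 9, 0), 40.7128, -74.0060)
london = (datetime(2025, 10, 13, 10, 0), 51.5074, -0.1278)
print(is_impossible_travel(new_york, london))
```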

To help you keep these personas straight, here’s a quick breakdown of what to look for.

Comparing the Types of Insider Threats

| Threat Type | Primary Motive / Cause | Common Behavioral Indicators | Potential Impact |
|---|---|---|---|
| Malicious Insider | Revenge, financial gain, ideology | Unusual data access, downloading large files, accessing systems outside of normal hours, use of unauthorized storage devices | Data theft, intellectual property loss, sabotage, reputational damage |
| Negligent Employee | Human error, lack of awareness, carelessness | Clicking phishing links, misconfiguring security settings, accidental data sharing, violating data handling policies | Accidental data breach, compliance violations, operational disruption |
| Compromised User | External attack, credential theft | Logins from new locations/devices, impossible travel alerts, privilege escalation attempts, unusual network activity | Widespread data breach, ransomware deployment, network compromise, financial fraud |

A nuanced, privacy-first solution is essential for telling these three threats apart. It's about analyzing behavioral metadata without ever reading private content, allowing your team to spot the subtle risk indicators that separate a malicious act from a simple mistake or a hijacked account.

This approach lets you deliver a measured response that protects the organization while respecting human dignity.

Why Traditional Security Tools Are Failing

The old security playbook is officially dead. In a world defined by remote work, dozens of SaaS apps, and personal devices connecting to corporate networks, the traditional perimeter has completely dissolved. This new reality creates dangerous blind spots that legacy tools were never built to see.

Let's be clear: firewalls, antivirus software, and basic access logs are still important. But they all work on one simple premise: keep the bad guys out. They are gatekeepers, not detectives. This entire approach crumbles when the threat is already inside, using legitimate credentials and authorized access to do harm.

The real challenge today is tracking meaningful activity across a fragmented digital environment without resorting to invasive surveillance. This is where privacy-first design becomes a critical differentiator. Modern solutions have to give you visibility while adhering to strict standards like ISO 27K, GDPR, and CPRA.

The Problem of Disconnected Data

Think about your typical employee. They’re using a dozen different platforms every day—Slack, Salesforce, Google Drive, internal servers, you name it. Each one of these systems generates its own logs, creating isolated silos of data. Your traditional security tools might see network traffic, but they can't connect the dots between an unusual login on one system and a massive data download on another.

This lack of integrated visibility is a massive vulnerability. An attacker or a malicious insider can easily exploit these gaps, moving laterally across your systems without ever triggering a single alarm. To catch these sophisticated threats, you need a unified view that correlates behavior across all platforms.

The stats paint a stark picture of a growing crisis. Between 2020 and 2022, insider threat-related incidents jumped 44%. As of 2022, 67% of companies were dealing with 21 to 40 of these incidents every year. The shift to remote work has made it even tougher, with 71% of organizations saying it's harder to monitor employee activities. StationX has more data on the challenges of detecting these internal risks.

A Real-World Failure Scenario

Imagine a project manager at a manufacturing company. Over a weekend, they log into the company's cloud server and download sensitive CAD files for a new product. On Monday, they email a compressed folder to their personal email with the subject "Vacation Photos."

What do the old tools see?

The firewall sees approved remote access.

The email gateway scans for malware and finds none.

The cloud server logs a legitimate download by an authorized user.

Each tool sees a single, isolated event and gives it a green light. Not a single one has the context to understand that these actions, when pieced together, represent a clear-cut case of intellectual property theft. This exact scenario plays out every day, costing companies millions. The tools failed because they were only looking for known threats and policy violations, not analyzing the nuances of human behavior.
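What was missing is correlation. As a rough Python sketch, suppose each tool emitted its weak signals into one shared stream; a few lines of code could then group them by user and flag anyone with signals from more than one system inside a time window. The event fields, the signal names, and the 72-hour window are assumptions made for this illustration, not a description of any specific product.

```python
from collections import defaultdict
from datetime import datetime, timedelta

WINDOW = timedelta(hours=72)  # assumed correlation window


def correlate(events, window=WINDOW, min_signals=2):
    """Flag users with several distinct weak signals from different systems.

    `events` holds only activity already tagged as a weak signal; each is a
    dict with 'user', 'time', 'source', and 'signal' keys.
    Returns {user: sorted list of signals}.
    """
    by_user = defaultdict(list)
    for event in events:
        by_user[event["user"]].append(event)

    flagged = {}
    for user, evs in by_user.items():
        evs.sort(key=lambda e: e["time"])
        for i, first in enumerate(evs):
            in_window = [e for e in evs[i:] if e["time"] - first["time"] <= window]
            signals = {e["signal"] for e in in_window}
            sources = {e["source"] for e in in_window}
            if len(signals) >= min_signals and len(sources) >= 2:
                flagged[user] = sorted(signals)
                break
    return flagged


events = [
    {"user": "pm", "time": datetime(2025, 10, 11, 14, 0),
     "source": "cloud", "signal": "weekend_bulk_download"},
    {"user": "pm", "time": datetime(2025, 10, 13, 9, 30),
     "source": "email", "signal": "archive_to_personal_address"},
    {"user": "dev", "time": datetime(2025, 10, 11, 14, 0),
     "source": "cloud", "signal": "weekend_bulk_download"},
]
print(correlate(events))
```

Here only the project manager is flagged: the weekend download and the Monday email each look harmless alone, but together they cross the threshold.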

Shifting Focus from Rules to Behavior

The only effective way forward is to move from a rules-based to a behavior-based approach. Instead of just asking, "Is this user allowed to do this?" we have to start asking, "Is this user's activity normal for them?" This is the core principle behind ethical and non-intrusive AI.

Actionable Insight: Conduct a "visibility audit" of your own organization. Map out where your most critical data lives and list the tools you currently use to monitor access to it. This simple exercise will quickly reveal the blind spots that a modern, integrated platform is designed to close.

A platform like E-Commander establishes a baseline of typical behavior for each user and then looks for meaningful deviations. It doesn't need to read the content of emails or files; it analyzes metadata—who accessed what, from where, at what time, and how much data was involved. This allows for real-time detection of risk indicators while fully respecting EPPA guidelines and employee privacy.
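To show what "baseline and deviation" can mean in practice, here is a deliberately simple Python sketch using a z-score on one piece of metadata: daily download volume. This is a generic statistical technique, not a description of E-Commander's internals; the function names, the sample figures, and the threshold of 3 standard deviations are all assumptions for illustration.

```python
from statistics import mean, stdev


def deviation_score(history, today):
    """Z-score of today's value against the user's own history."""
    if len(history) < 2:
        return 0.0  # not enough data to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return 0.0 if today == mu else float("inf")
    return (today - mu) / sigma


def is_anomalous(history, today, threshold=3.0):
    """Flag only upward deviations at or above the assumed threshold."""
    return deviation_score(history, today) >= threshold


# Hypothetical daily download volumes in MB for one user.
usual_week = [120, 95, 110, 130, 105, 100, 115]
print(is_anomalous(usual_week, 125))   # an ordinary day
print(is_anomalous(usual_week, 4200))  # a sudden bulk export
```

Because each user is compared only against their own history, a heavy-download role such as a data analyst is not flagged simply for doing their job.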

By focusing on behavioral analytics, you can finally see the complete picture that your traditional tools are missing, enabling your team to respond to genuine threats swiftly and effectively.

Building a Defense with Ethical AI

Spotting a problem is one thing; building a real solution to stop it is what actually protects your organization. It’s time to get ahead of the curve and build a proactive defense against internal risks using ethical, non-intrusive AI. This is how you effectively detect insider threats without making your team feel like they’re constantly being watched.

The whole approach hinges on analyzing behavioral metadata—not the content of communications. A platform like Logical Commander’s E-Commander zeros in on the "who, what, when, and where" of digital activity. It looks at file access times, communication patterns, login locations, and data transfer volumes without ever reading a private message or document.

This privacy-first design is everything. It allows the system to spot deviations from normal behavior that signal risk, all while respecting employee privacy and staying fully aligned with strict EPPA guidelines.

Putting Ethical AI into Practice

Let's walk through a real-world scenario. You have an engineer at a tech firm who consistently works nine-to-five, accessing project blueprints and source code from the corporate network. Then, one weekend, the system flags a few anomalies.

The engineer logs in at 3 a.m. from an unrecognized IP address and starts downloading entire project folders to an external drive. Taken alone, any one of these actions might be brushed off. Together, they mark a major deviation from the engineer's established behavioral baseline.

Logical Commander's AI doesn't see "bad" behavior; it sees an anomaly that needs a closer look. This alert gives the security team a chance to investigate right away, stopping potential IP theft before the damage is done. That’s the power of real-time detection. To get a deeper dive on this tech, check out our guide to AI-powered human risk management.

Fostering Cross-Department Collaboration

Technology alone isn't a silver bullet. Truly effective insider risk detection requires a unified response from multiple departments. AI alerts are just signals; interpreting them correctly requires context from across the organization.

A strong defense brings together the key stakeholders—legal, HR, and security—who can assess an alert from every angle. This is absolutely critical for a fair and compliant process.

To make this happen, here are a few practical steps:

Actionable Insight 1: Create a Unified Response Team. Incident response can't live solely in the IT or security department. You need a cross-functional team with members from HR, Legal, and Security to ensure every alert is evaluated holistically, balancing security needs with employee rights.

Actionable Insight 2: Centralize Your Workflow. Give this team a shared platform to view alerts, share notes, and manage case files. A tool like our Risk-HR solution provides a central hub that breaks down information silos and gets everyone working from the same playbook.

This kind of collaborative structure, powered by non-intrusive AI, is what separates a modern insider risk program from the rest. It creates a system that not only flags risks effectively but is also fair, transparent, and respectful of every individual. Advisory firms can help their clients implement these strategies by joining our PartnerLC network, part of our partner ecosystem for global coverage.

The High Cost of Ignoring Internal Risks

Ignoring an internal risk isn’t just a security blind spot; it's a financial time bomb waiting to go off. The fallout from a single incident goes far beyond the initial cleanup, sending shockwaves through every part of the business. The message here is simple: proactive detection isn't just another line item on the budget—it's one of the smartest investments you can make.

The numbers are staggering and they keep climbing. Recent findings from the 2025 Ponemon Cost of Insider Risks Report show the average annual cost has ballooned to $17.4 million, a significant jump from $16.2 million in 2023. What’s driving this spike? Escalating containment and response expenses, which highlights just how much financial pressure these events put on a company. You can find more details on these critical cost findings on dtexsystems.com.

But the damage isn't just about the money you lose directly. It extends to severe reputational harm and legal penalties, which is why understanding the complexities of data breaches is non-negotiable. When customer trust evaporates, the long-term hit to your revenue can make even the biggest regulatory fines look small.

From Months of Damage to Days of Defense

The single most critical factor in controlling these costs is time.

The longer a threat flies under the radar, the more damage it does. It's a simple equation. Traditional security methods often take months to uncover an incident, but by that point, the data is long gone and the financial bleeding is severe.

This is where a strategy of real-time detection completely rewrites the rules. By spotting risk indicators the moment they happen, an ethical AI platform can shrink the containment window from months down to just a few days. Cutting that timeline doesn't just save money; it can save the business itself.

Actionable Insight: Go ahead and calculate the potential cost of a single, realistic data breach at your organization. Factor in everything: investigation hours, legal fees, potential fines (think GDPR or CPRA), and customer notification costs. That number? It becomes a powerful tool for building a business case with leadership for a modern insider risk program.
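If it helps to get started, the calculation can be as simple as the Python sketch below. Every figure in the example call is a made-up placeholder, and the function and parameter names are our own for illustration; swap in your organization's real estimates.

```python
def breach_cost_estimate(
    investigation_hours,
    hourly_rate,
    legal_fees,
    regulatory_fines,
    records_affected,
    notification_cost_per_record,
):
    """Sum the line items named above into a single estimate."""
    items = {
        "investigation": investigation_hours * hourly_rate,
        "legal_fees": legal_fees,
        "regulatory_fines": regulatory_fines,
        "customer_notification": records_affected * notification_cost_per_record,
    }
    items["total"] = sum(items.values())
    return items


# Placeholder inputs only; none of these numbers come from a real incident.
estimate = breach_cost_estimate(
    investigation_hours=400,
    hourly_rate=150,
    legal_fees=250_000,
    regulatory_fines=500_000,
    records_affected=20_000,
    notification_cost_per_record=2.50,
)
for item, cost in estimate.items():
    print(f"{item:>22}: ${cost:,.0f}")
```

This covers only the direct costs listed above. Reputational harm and lost revenue are harder to quantify and would sit on top of the total.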

Proving ROI with Governance-Grade Reporting

Justifying a security budget often feels like an uphill battle. Leadership wants to see clear, measurable ROI, something that’s nearly impossible to deliver with legacy tools that only report on what already went wrong. This is where a modern platform changes the conversation entirely.

Logical Commander's E-Commander platform delivers governance-grade reporting that translates complex risk signals into clear financial metrics. The dashboards provide the hard data needed to show exactly how proactive detection is actively preventing costly incidents from ever happening.

Our Risk-HR solution takes it a step further, turning the abstract concept of human capital risk into a manageable and measurable metric. This gives HR, Compliance, and Security teams the power to show leadership precisely how the investment in ethical AI is protecting the company’s bottom line. When you can connect your security posture directly to financial health, getting executive buy-in becomes a whole lot easier.

A Final Thought on Proactive Risk Management

Protecting your company from internal risks isn't about more surveillance—it's about shifting your entire strategy to be more proactive, human-centric, and privacy-first. For most leaders, this journey starts with understanding the nuances of tracking versus measuring digital activity. We've walked through why old methods are failing and how ethical AI gives you the early warnings you need to stop major incidents before they hit your bottom line or your reputation.

Logical Commander is more than just a tool; we're a partner in building a more resilient and ethical organization. By focusing on behavioral risk indicators while championing cross-department collaboration, our platform delivers real-time detection with a measurable ROI. You can see exactly how this works in our case study on detecting internal fraud in 48 hours.

Ready to see how our E-Commander platform can transform your approach to internal risk?

**Request a demo** to experience our ethical, EPPA-compliant AI for yourself.

Know First. Act Fast. Ethical AI for Integrity, Compliance, and Human Dignity.
